For the robots or technology that may surpass our intelligence in the near future,

observe my fleshy middle-digit and hear me cry:

“I wave my private parts at your Auntie!

Your mother was a hamster and your father smells of elderberry!”

Erewhon Revisited

Dec. 12, 2014

Erewhon is a novel by Samuel Butler, first published anonymously in 1872. The title is the name of a fictional country, whose location isn’t revealed, supposedly discovered by the protagonist. The name is a made-up word that Butler meant to be read as “nowhere” backward (even though the letters “h” and “w” are transposed). The first few chapters deal with the discovery of Erewhon (pronounced with three syllables, E-re-whon, or sometimes with two, as “ere-hwon”) and are based on Butler’s own experiences in New Zealand where, as a young man, he worked as a South Island sheep farmer for 4 years (today, one of NZ’s largest sheep stations, located near where he lived, is named Erewhon in his honour). At first glance, the country appears to be a Utopia, yet it soon becomes clear that this is far from the case. The book satirises various aspects of Victorian society and features an absence of machines, which the Erewhonians perceive to be potentially dangerous.

Butler was among the first to propose that machines might develop consciousness.

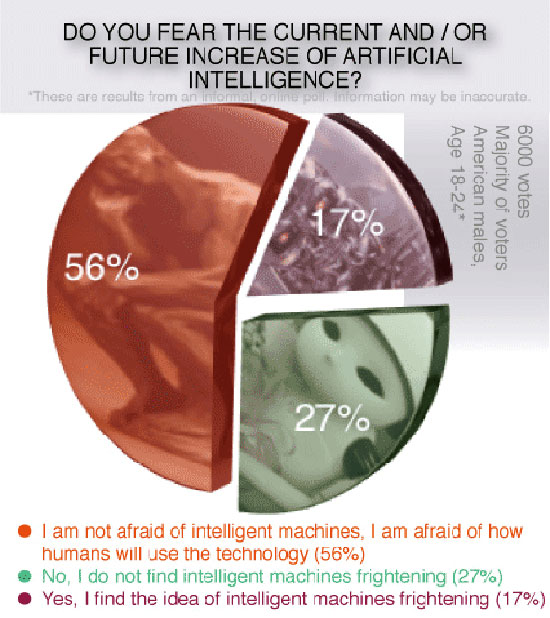

Recently, there has been a spate of quotes in the news (from Elon Musk and scientists Stephen Hawking, Bill Joy, David Chalmers, and others) to the effect that a strong general artificial intelligence (AI) may be something to be feared. Should we be worried? Perhaps not. The threat of AI is likely not at all what they seem to imply, and in any case, it isn’t coming anytime soon (if ever).

What Negative Comments Are Being Made?

In the “Machines will make people look or act stupider” group:

- Alan Turing, British mathematician, logician, cryptanalyst, philosopher, “Intelligent Machinery, A Heretical Theory” (1951):

There’ll be plenty to do, for example in trying to keep one’s intelligence up to the standard set by the machines; it seems probable that once machine thinking starts, it won’t take long to outstrip our feeble powers. There’ll be no question of the machines dying, and they’ll be able to converse with each other to sharpen their wits. At some stage therefore we’ll have to expect the machines to take control…1

- Nicholas Carr, American technology and business writer, “Automation Makes Us Dumb”, The Wall Street Journal, November 2014:

Ten years ago, scientists in the Netherlands had a group of people carry out complicated analytical and planning tasks using either rudimentary software that provided no assistance or sophisticated software that offered a great deal of aid. The researchers found that the people using the simple software (requiring people to have higher self-reliance) made fewer mistakes and developed better strategies and a deeper aptitude for the work. People using more advanced software (requiring less self-reliance) often “aimlessly clicked around” when confronted with a tricky problem. The supposedly-helpful software may actually short-circuit personal thinking and learning.2

- Bill Joy, (former) Chief Scientist at Sun Microsystems, “Why the Future Doesn’t Need Us”, Wired Magazine, April 2000:

The human race might drift into a position of such dependence on machines that it just accepts all machine decisions. As society and its problems become more complex and machines more intelligent, machine-made decisions will bring better results. Eventually, the decisions necessary to keep the system running will be so complex that humans will be incapable of making them. Machines will be in effective control. Turning them off would amount to suicide.

However, unlike most of those concerned about AI, Joy seems to fear the machines’ owners rather than the machines themselves, because he goes on to write:

Control over large systems of machines will be in the hands of a tiny elite — just as it is today, but with two differences. The elite will have greater control over the masses, and because human work will no longer be necessary, the masses will be superfluous, a useless burden on the system. If the elite are ruthless they may simply decide to exterminate the mass of humanity. If they are humane they may use propaganda or other psychological or biological techniques to reduce the birth rate until the mass of humanity becomes extinct, leaving the world to the elite. (After the publication of the article, Bill Joy suggested scientists should refuse to work on technologies that have the potential to cause harm.)3

In the “If the machines won’t actively help us, why bother with them?” group:

- David Chalmers, Australian philosopher and cognitive scientist (specialising in philosophy of mind and language), “The Singularity: A Philosophical Analysis”:

If at any point there’s a powerful AI with the wrong value system, we can expect disaster (relative to our values) to ensue. The wrong system need not be anything as obviously bad as, say, valuing the destruction of humans. If it’s merely neutral with respect to some of our values, then in the long run we can’t expect the world [to continue] to conform to those values… And even if the AI system values human existence — but only insofar as it values all conscious or intelligent life — then the chances of human survival are at best unclear.4

- Irving John Good, statistician (who worked with Turing to crack Nazi codes in World War II), “Speculations Concerning the First Ultraintelligent Machine”, 1965 (the origin of the phrase “intelligence explosion”):

Let an ultra-intelligent machine be defined as a machine that can far surpass all intellectual activities of all men, however clever. An ultra-intelligent machine could design even better machines; there would be an “intelligence explosion,” and man would be left far behind. Thus the first ultra-intelligent machine is the last invention man need ever make — provided the machine allows us to keep it under control.5

- Daniel Dewey, research fellow at the Future of Humanity Institute (where his speciality is machine superintelligence), “Omens”, Aeon Magazine, February 2013:

The strong realisation of most AI motivations is incompatible with human existence. An AI might want to do certain things with matter in order to achieve a goal, like building giant computers or other large-scale engineering projects that might involve tearing apart the Earth to make huge solar panels. A superintelligence might not take our interests into consideration in those situations, just like we don’t take root systems or ant colonies into account when we go to construct a building.6

- Stephen Hawking, director of research at the Department of Applied Mathematics and Theoretical Physics at Cambridge, [The movie] “Transcendence looks at the implications of artificial intelligence — but are we taking AI seriously enough?” from The Independent, 2 December 2014:

Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last. In the medium term, AI may transform our economy to bring both great wealth and great dislocation — outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we don’t understand. Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it will be controlled at all.7

In the “Machines will casually eliminate us because we no longer have value” group:

- Nick Bostrom, Oxford philosopher and author of Superintelligence: Paths, Dangers, Strategies:

Horses were initially complemented by carriages and ploughs, which greatly increased their productivity. Later, substituted for by automobiles and tractors, horses became obsolete as a source of labour and many were sold off to meatpackers to be processed into food, leather, and glue. In the US, there were about 26 million horses in 1915. By the early 1950s, 2 million remained. If humans aren’t useful — or are only useful (as in The Matrix) as batteries — AI will have no problem enslaving, imprisoning, or liquidating us.8

- Eliezer Yudkowsky, Machine Intelligence Research Institute, “Artificial Intelligence as a Positive and Negative Factor in Global Risk”, 2006:

An unfriendly AI with molecular nanotechnology, or an acquired ability to deform space, need not bother with marching robot armies, or blackmail, or subtle economic coercion. An AI with the ability to kill humans may indeed murder them, though it won’t hate them (nor love them), but they’re made out of atoms which it can use for more productive things. The AI runs on a different timescale than humans; by the time human neurons think the words “someone should do something about this situation” it’s already too late.9

- Steve Omohundro, computer scientist and entrepreneur, “Autonomous technology and the greater human good”, Journal of Experimental & Theoretical Artificial Intelligence, April 2014:

Harmful systems might seem as if they’d be harder to design or less powerful than safe systems. Unfortunately, the opposite is true. Some simple utility functions can be extremely harmful. Here are 6 potential ethical categories of harm (listed from bad to worse):

1. Sloppy: systems that were intended to be safe, but weren’t designed correctly.

2. Simplistic: systems that weren’t intended to be harmful, but have harmful unintended consequences.

3. Greedy: systems whose utility functions reward them for controlling as much matter and free energy in the universe as possible.

4. Destructive: systems whose utility functions reward them for using as much free energy as possible as fast as possible.

5. Murderous: systems whose utility functions reward them for destroying other systems.

6. Sadistic: systems whose utility functions reward them when they thwart goals of other systems, gaining utility as their adversaries’ utility fades.

Once powerful autonomous systems are common, modifying one into a harmful form would be simple. It’s important to develop strategies for monitoring and controlling this.10

In the “Unspecified fears” group:

- Elon Musk, futurist (founded PayPal, Tesla and SpaceX), Edge, November 2014:

The pace of progress in [strong] AI is incredibly fast, close to exponential. The risk of something seriously dangerous happening is in the 5-year timeframe, 10 years at most. I’m not alone in thinking we should be worried. The leading AI companies recognise the danger, but believe they can shape and control any digital superintelligences, preventing bad ones from escaping into the Internet; [however,] that remains to be seen.11

- Elon Musk, Twitter, August 2014:

Aug 2: AI: potentially more dangerous than nukes.12

Aug 3: Hope we’re not just the biological boot loader for digital superintelligence. Unfortunately, that is increasingly probable.13

- Elon Musk, “I’m Worried About a Terminator-Like Scenario Erupting From Artificial Intelligence”, Business Insider Australia (from CNBC’s “Closing Bell”), June 2014:

I was an investor in DeepMind before Google acquired it and [now am an investor in] Vicarious [along with Mark Zuckerberg, Jeff Bezos and Ashton Kutcher, among others]. It’s not from the standpoint of actually trying to make any investment return [but] to keep an eye on what’s going on with artificial intelligence. I think there’s potentially a dangerous outcome there. You have to be careful.14

- Noah Smith, finance professor, SUNY Stony Brook, “The Slackularity”, Noahpinion, February 2014:

If an AI is smart enough to create a smarter AI, it may be smart enough to understand and modify its own mind. That means it’ll be able to modify its own desires. And if it gets that power, its motivations will become unpredictable, from our point of view, because small initial “meta-desires” could cause its personality to change in highly nonlinear ways. If we do succeed in inventing autonomous, free-thinking, self-aware, hyper-intelligent beings, they’ll do the really smart thing, and reprogram themselves to be Mountain Dew-guzzling Dungeons & Dragons-playing slackers.15 [Wait, what?]

Should We Be Worried?

Are you scared now?

If the answer is “yes”, hang on — your fear may not be necessary.

The sceptics’ camp against AI taking over the world has also grown. Its members include Jaron Lanier, the virtual-reality pioneer, who now works for Microsoft Research; Mitch Kapor, the early PC-software entrepreneur who co-founded the Electronic Frontier and Mozilla foundations (he’s bet $10,000 that no computer will pass for human before at least 2030); and Microsoft co-founder Paul Allen, who has an eponymous neuroscience-research institute (Allen says most of the fear-mongers have vastly underestimated the brain’s complexity). Marc Andreessen, a creator of the original Netscape browser and now a Silicon Valley venture capitalist, addressed the subject in one of his recent tweet-storms: “I don’t see any reason to believe there will be some jump where all of a sudden they have super-human intelligence,” he tweeted, and, “The singularity AI stuff is all just a massive hand-wave, ‘At some point magic happens’? Nobody has any theory for what this is.”16

So what are the bottlenecks?

The symbol grounding problem is related to the problem of how words (symbols) get their meanings, and hence to the problem of what meaning itself really is. Meaning is in turn related to consciousness, or how it is that mental states are meaningful.

According to a widely held theory of cognition called computationalism, thinking is just a form of computation, but that in turn is just formal symbol manipulation: symbols are manipulated according to rules based on the symbols’ shapes, not their meanings. How are symbols (for example, the words in our heads) connected to the things they refer to?

If an AI has both a symbol system and a sensorimotor system that reliably connects its internal symbols to the external objects they refer to in order to interact with them, then would the symbols have “meaning” to the AI? This is something that cognitive science itself can’t determine, or explain. For one thing, computational theory leaves open the idea that certain mental states, such as pain or depression, may not be representational and therefore may not be suitable for a computational treatment. These non-representational mental states are known as qualia.

Unfortunately, natural language (which may not literally be the language of thought, but which any human-level AI programme has to be able to handle) also can’t be treated as formal symbols. To give a simple example, “day” sometimes means “day and night” and sometimes means “day as opposed to night”, depending on context. Or take the following pair of sentences:

The committee denied the group a parade permit because they advocated violence.

The committee denied the group a parade permit because they feared violence.

The sentences differ by only a single word, and on the surface they still say much the same thing, but that one word changes who “they” refers to: the group in the first sentence, the committee in the second. Disambiguating these types of sentences can’t be done without extensive (potentially unlimited) knowledge of the real world. (Try communicating only in pictographs for a couple of days and the problem becomes clearer.)

Philosophical discussions of consciousness tend to centre on 4 things which (for the time being, at least) set conscious humans apart from “mere” computers. First, real subjective sensations (qualia, feelings that so far only living things experience, such as joy or want); second, in various forms, real understanding or meaning (intentionality) that allows us to talk “about” things that may not even exist; third, moral responsibility (we are responsible for the acts we consciously chose, those we meant to take place and whose consequences we considered, and not for those instances where we couldn’t have behaved otherwise); and fourth, perception of relevance (the “frame problem” of which context to apply in a given instance).

Attempts to produce a conscious robot or computer programme are attempts to transfer moral responsibility from the engineers or the programmers to their creation. If a way could be found to do this, it would surely have enormous practical implications.

There are 3 ways one can look at free will:

- that it is, in fact, an illusion;

- that it is real but mysteriously at odds with physics; or

- that the two are compatible.

Those who believe that the self and/or consciousness are illusory would most naturally find themselves sceptics about free will; those who believe in the straightforward reality of free will would be inclined to see a deep mystery (or at least, something outside the scope of current physics) in consciousness. If we say that free will and physics are compatible, we must accept that in some strict sense all events are predetermined, but consciousness allows us to equivocate by modifying our interpretation: “I did this and I meant to, but I didn’t mean for that to happen.”

Whether or not these things are ultimately attainable by computers, they’re profoundly mysterious even in human form.17

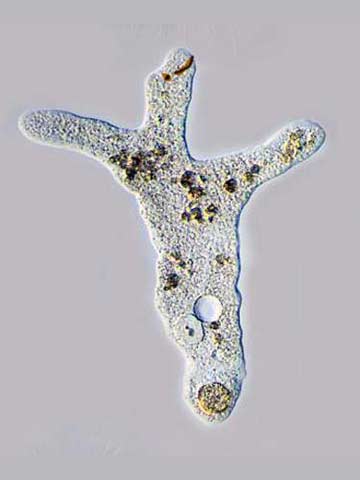

It’s striking that even the simplest forms of life — amoebae, for example — exhibit an intelligence, an autonomy, an originality, that far outstrips even the most powerful computers. A single cell has a life story; it turns the medium in which it finds itself into an environment and organises that environment into a place of value. It seeks nourishment. It makes itself — and in making itself it introduces meaning into the universe. Admittedly, unicellular organisms are not very bright — but they’re smarter than clocks and even supercomputers — for they possess the rudimentary beginnings of that driven, active, compelling engagement that we call life and that we call mind. Machines don’t make information — they’re given it to process. But the amoeba does have information — it gathers it and even manufactures it.

Worry about the singularity only after someone has made a machine that exhibits the agency and awareness of an amoeba.

If it’s the case that an execution of a computer programme instantiates what it feels like to be human (experiencing pain, smelling the beautiful perfume of a long-lost lover), then phenomenal consciousness must be everywhere: in a cup of tea, in the chair you’re sitting on.

— Mark Bishop, professor of cognitive computing, “Dancing with Pixies”, 2002

There is another sense, though, in which we hit the singularity long ago. We don’t make smart machines and we don’t make machines likely to be smarter than us. But we do make ourselves smarter and more flexible and more capable through our machines and other technologies. Clothing, language, pictures, writing, the abacus and so on — each of these has not only expanded us but has altered us, making us into something we weren’t before. And this process of making and remaking, or extending and transforming, is as old as our species.

In a sense, then, we’ve always been trans-human, more than human, or more than merely biological. Or rather, our biology has always been technology-enriched and more than merely flesh and blood. We carry on the process that begins with the amoeba. Watson the computer may have a pseudo-intelligence but each human has an intelligence that is both real and novel.18 To be human is to be “a” human, a specific person with a life history, idiosyncrasy, and point of view.19

Disaster scenarios are cheap to play out in the probability-free zone of our imaginations, and they can always find a worried, techno-phobic, or morbidly fascinated audience. In the past we were chastened from trying to know or do too much by the fables of Adam and Eve, Prometheus, Pandora, the Golem, Faust, the Sorcerer’s Apprentice, Pinocchio, Frankenstein, and HAL. More recently we’ve been kept awake by the population bomb, polywater, resource depletion, Andromeda strains, suitcase nukes, the Y2K bug, and engulfment by nano-technological grey goo.

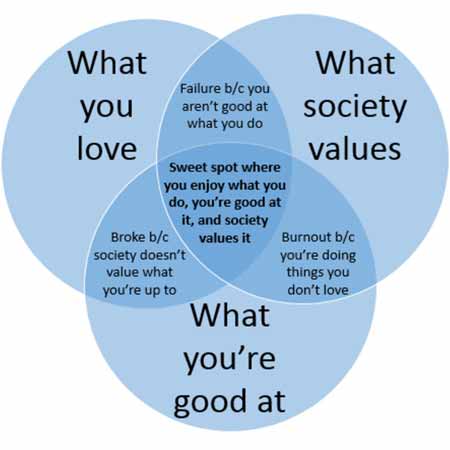

The problem with AI dystopias is that they project a parochial alpha-male psychology onto the concept of intelligence. Even if we did have superhumanly intelligent robots, why would they want to depose their masters, massacre bystanders, or take over the world? Intelligence is the ability to deploy novel means to attain a goal, but the goals are extraneous to the intelligence itself: being smart is not the same as wanting something. History does turn up the occasional megalomaniacal despot or psychopathic serial killer, but these are products of a history of natural selection shaping testosterone-sensitive circuits in a certain species of primate, not an inevitable feature of intelligent systems. It’s telling that many of our techno-prophets can’t entertain the possibility that artificial intelligence will naturally develop fully capable of solving problems, but with no burning desire to annihilate innocents or dominate the civilisation.20

What makes Elon Musk so afraid? Not what you might think. He’s an unconventional marketer of the highest calibre. Consider the Hyperloop media extravaganza from August 2013 — it looked as if people would soon be riding super-fast, solar-powered intercity trains. That may in fact never happen, but the performance forged a strong link between Musk and tomorrow’s transportation technology. That’s useful for selling expensive futuristic cars, especially when a company as mundane as Toyota has more promising technology in the field. Or take Musk’s announced intention to die on Mars — a plug for SpaceX, the entrepreneur’s rocket company that needs government orders and subsidies to stay afloat. Musk is good at hype, and his new investment, Vicarious (which focusses on artificial brains), constantly needs more funding. The latest big investment, $12 million, came earlier this month from the Swiss electrical engineering company ABB.

Vicarious may at some point get quite good at recognising physical objects, but it’s a big leap of faith to imagine its system telling a biker in 2019, “I need your clothes, your boots and your motorcycle.” It’s much easier to imagine it as an expensive acquisition target, more lucrative for investors even than DeepMind. That’s why admiration for Musk as a pitchman outweighs any fear that artificial intelligence will soon take away jobs.21

Overseas there are now university institutes devoted to worrying about the downsides of the Singularity: at Oxford, the Future of Humanity Institute; at Cambridge, the Centre for the Study of Existential Risk (co-founded by Jaan Tallinn of Skype). In America there is Singularity University (SU), the go-go postgraduate institute founded by (author and Singularity theorist) Ray Kurzweil and (MIT- and Harvard-educated tech entrepreneur) Peter Diamandis, creator of the X Prizes (multi-million-dollar contests to promote super-efficient cars, private moon landings, and the like). SU is based at NASA’s Ames Research Center in Silicon Valley, funded by a digital-industrial-complex pantheon: Cisco, Genentech, Nokia, GE, and Google. In its 6 years of existence, many hundreds of executives, entrepreneurs, and investors (what Diamandis calls “folks who have done a $100 million exit and are trying to decide what to do next”) have paid $12,000 to spend a week sitting in moulded-plastic chairs on rollers (futuristic circa 1970s) listening to Kurzweil, Diamandis, and others explain and evangelise. Thousands of young postgrads from all over the world apply for the 80 slots in the annual 10-week summer sessions that train them to create Singularity-ready enterprises.16

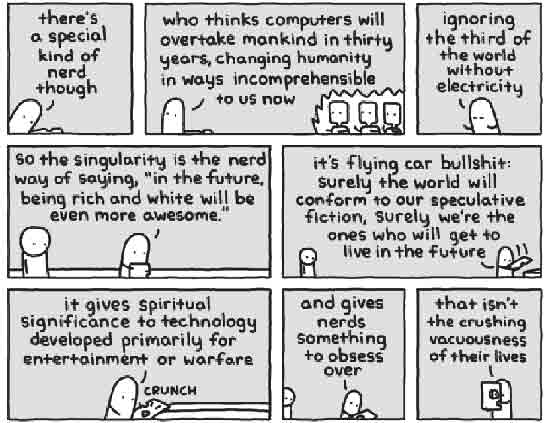

The mythology around AI is a re-creation of some traditional ideas about religion, but applied to the technical world. All potential damages essentially mirror damages that religion has brought to science in the past: anticipation of a threshold, an end of days, a new kind of personhood. If strong AI were to come into existence, it would soon gain supreme power, exceeding people. This is sometimes called the Singularity, or superintelligence, or all sorts of different terms in different periods. It’s similar to a superstitious idea about divinity, that there’s an entity that will run the world, that maybe you can pray to, maybe you can influence, but you should be in terrified awe of it. That particular idea is distorting our relationship to technology and it’s been dysfunctional in human history in exactly the same way; only the words have changed.

It’s often been the case in organised religion’s history that people are disempowered precisely to serve what were perceived to be the needs of some deity or another, when in fact what they were doing was supporting an elite class that functioned as the “priesthood” for that deity. That looks a lot like the new digital economy, where there are (natural language) translators and many others who contribute to the corporations that facilitate the operation of data schemes, contributing mostly to the fortunes of whoever runs the top computers. The new elite might say, “It’s not us, they’re helping develop AI.” This is similar to someone in history saying, “Oh, build these pyramids, it’s in the service of the deity,” but, on the ground, it’s really in the service of an elite. The effect of the new [religious] idea of AI is a lot like the economic effect of the old idea of religion.22

Presumably, a proper deity would need to be conscious. Otherwise, how would each human potentially be recognised and helped? (Consciousness is the defining essence of humanity, so maybe a strong AI will have multiple consciousnesses, many personalities, to suit the myriad situations in which it might find itself? Like God supposedly does.)23

Why should our early 21st century stab at a computational AI fully encompass natural intelligence, which took communities of cells more than 4 billion years to develop?24 Because we’re smarter than cells, of course. As a conscious AI would be smarter than us. But doesn’t consciousness require life? Does an AI need to be alive? If it does, perhaps the focus of our efforts should first be to create artificial life and then to create artificial intelligence; otherwise, we may never arrive at our desired destination.

What CAN We Expect and When?

“Civilisation 2030: The near future for law firms” by Jomati Consultants foresees a world in which population growth is actually slowing, with “peak humanity” occurring as early as 2055, and ageing populations bringing a growth in demand for legal work on issues affecting older people. The report’s focus on the future of work contains disturbing findings for lawyers. Its main proposition is that [weak] AI is already close in 2014. “It is no longer unrealistic to consider that workplace robots and their AI processing systems could reach the point of general production by 2030. By this time, ‘bots’ could be doing ‘low-level knowledge economy work’ and soon much more. Eventually each bot would be able to do the work of a dozen low-level associates. They would not get tired. They would not seek advancement. They would not ask for pay rises. Process legal work would rapidly descend in cost.”25

We’re a long way from a working artificial brain. Given our limited understanding of biological neurons and learning processes, the connectivity scalability issues, and the substantial computing power required to emulate neuronal function, one may understandably be sceptical that a group of interconnected artificial neurons would possibly behave in a fashion similar to the simplest animal brains, let alone display intelligence.

It seems that there isn’t one good approach to all of the problems being encountered. In the near term, software is easier to experiment with than hardware, and connectivity and structural plasticity are practical to attain in partial brain emulations. Experiments with software may produce more progress on requirements for neural modelling and experiments with analogue hardware might show promise for future whole brain emulation. The most promising approaches depend on timeframe:

1. In the short term, scalability to the size of a mammalian brain is not practical, but software simulation seems most promising for emulating and understanding small networks of neurons to experiment with learning algorithms.

2. Loosely-coupled processors seem most likely to yield neural emulation on the scale of the entire brain in the medium term. The result might or might not behave like a biological brain, given the simplifying assumptions.

3. In the long term, if a solution such as DNA-guided self-assembly is developed to allow self-guided wiring and re-wiring of dendrites and axons, such an analogue solution seems the only approach that [could ever] achieve bio-realism on a large scale.

Neuroscientists are uncovering additional mechanisms and aspects of neural behaviour daily, and neural models for artificial brains may become significantly more complex with future discoveries. However, even limited and simplified artificial brains provide a test bed for research into learning and memory. Substantial progress will be made through new technologies and ideas over the coming decades, providing considerable value in signal processing and pattern recognition; experiments with neural networks may yield insights into learning and other aspects of the brain. And independent of any value to neuroscience, efforts in artificial brain emulation provide new computer architectures. If the goal is better artificial intelligence or more efficient computer architectures rather than bio-realistic brain emulation, then there’s value in continued research despite pessimism about current timeframes, goals, and technologies.26 (Just don’t expect miracles.)

David Deutsch, Oxford physicist, said: “No brain on Earth is yet close to knowing what brains do. The enterprise of achieving it artificially — the field of AI — has made no progress whatever during the entire 6 decades of its existence.” He adds that he thinks machines that think like people will happen someday — but will we ever have machines that feel emotions like people?

The founders of AI, and those who work on AI, still want to make computers do things they can’t now do in the hope that something will be learned from this effort or that something will have been created that’s of use. A computer that can hold an intelligent conversation with you would be potentially useful. Is it intelligent? Certainly not at this time, though it may be said to be knowledgeable. But the programme has no self-knowledge. It doesn’t know what it is saying and doesn’t know what it knows. The fact that we have stuck ourselves with this silly idea of strong AI causes people to mis-perceive the real issues. The field should be renamed “the attempt to get computers to do really cool stuff” but of course it won’t be. You’ll never have a friendly household robot with whom you can have deep meaningful conversations — at least not in your lifetime.

Humans are born with individual personalities and their own set of wants and needs and they express them early on. No computer starts out knowing nothing and gradually improves by interacting with people. We always kick that idea around when we talk about AI, but no one ever does it because it really isn’t possible. Nor should it be the goal of the field formerly known as AI. The goal should be figuring out what great stuff people do and seeing if machines can do bits and pieces of that. There really is no need to create artificial humans anyway. We have enough real ones already.27

Maybe the only significant difference between a really smart simulation and a human being is the noise they make when you punch them.

— Terry Pratchett, The Long Earth

Sadly, no, for now.

Endnotes

1 https://diogenesii.files.wordpress.com/2012/06/intelligent-machinery-a-heretical-theory.pdf

2 http://online.wsj.com/articles/automation-makes-us-dumb-1416589342

3 http://archive.wired.com/wired/archive/8.04/joy.html and http://en.wikipedia.org/wiki/Why_The_Future_Doesn%27t_Need_Us

4 http://consc.net/papers/singularity.pdf

5 http://webdocs.cs.ualberta.ca/~sutton/Good65ultraintelligent.pdf

6 http://aeon.co/magazine/philosophy/ross-andersen-human-extinction/

8 http://www.nickbostrom.com/existential/risks.html

9 https://intelligence.org/files/AIPosNegFactor.pdf

10 http://www.tandfonline.com/doi/pdf/10.1080/0952813X.2014.895111

11 https://www.reddit.com/r/Futurology/comments/2mh8tn/elon_musks_deleted_edge_comment_from_yesterday_on/ from http://edge.org/conversation/the-myth-of-ai

12 https://twitter.com/elonmusk/status/495759307346952192

13 https://twitter.com/elonmusk/status/496012177103663104

14 http://www.businessinsider.com.au/musk-on-artificial-intelligence-2014-6

15 http://noahpinionblog.blogspot.co.nz/2014/02/the-slackularity.html

16 Kurt Andersen, “Enthusiasts and Sceptics Debate Artificial Intelligence”, Vanity Fair, 26 November 2014, http://www.vanityfair.com/culture/2014/11/artificial-intelligence-singularity-theory

17 Peter Hankins, Conscious Entities, http://www.consciousentities.com/whole.htm

18 Alva Noë, philosopher at the University of California at Berkeley (where he writes and teaches about perception, consciousness and art), “Artificial Intelligence, Really, Is Pseudo-Intelligence”, 13.7 Cosmos & Culture from NPR, 21 November 2014, http://www.npr.org/blogs/13.7/2014/11/21/365753466/artificial-intelligence-really-is-pseudo-intelligence

19 Brian Christian, The Most Human Human: What Talking with Computers Teaches Us about What It Means to Be Alive, http://www.goodreads.com/quotes/tag/artificial-intelligence

20 Steven Pinker, Harvard psychology professor, “The Myth of AI”, Edge, http://edge.org/conversation/the-myth-of-ai

21 Leonid Bershidsky, author, editor, and publisher, “Musk’s Artificial Intelligence Warnings Are Hype”, Bloomberg View, 19 November 2014, http://www.bloombergview.com/articles/2014-11-19/musks-artificial-intelligence-warnings-are-hype

22 Jaron Lanier, computer scientist and musician, “The Myth Of AI”, Edge, http://edge.org/conversation/the-myth-of-ai

23 Jaron Lanier, from Who Owns the Future?: “Enthusiasts and Sceptics Debate Artificial Intelligence”, Vanity Fair, 26 November 2014, http://www.vanityfair.com/culture/2014/11/artificial-intelligence-singularity-theory

24 Lee Smolin, physicist, “The Myth of AI”, Edge, http://edge.org/conversation/the-myth-of-ai

25 Dan Bindman, “Report: artificial intelligence will cause ‘structural collapse’ of law firms by 2030”, Legal Futures, 1 December 2014, http://www.legalfutures.co.uk/latest-news/report-ai-will-transform-legal-world

26 Rick Cattell and Alice Parker, “Challenges for Brain Emulation: Why is it so Difficult?”, Natural Intelligence: the INNS Magazine Volume 1, Issue 3, Spring/Summer 2012, http://cnsl.kaist.ac.kr/ni-inns/V01N03/NI_Vol1I3_SS2012_17.pdf

27 Roger Schank, psychologist and computer scientist, “2014: What Scientific Idea Is Ready for Retirement? Artificial Intelligence”, Edge, 30 November 2014, https://edge.org/response-detail/25406

The Distillery

Looking Grey

- Steven Koonin is a computational physicist who has studied climate change extensively. He says there’s no doubt that our climate is changing (as it always has) nor that it’s affected by humans. But the crucial, unsettled scientific question for policy is, “How will the climate change over the next century under both natural and human influences?” Even though human influences can have serious consequences for climate, those influences are physically small in relation to the climate system as a whole. For example, human additions to carbon dioxide in the atmosphere by the middle of the 21st century are expected to directly shift the atmosphere’s natural greenhouse effect by only 1-2%. Since the climate system is highly variable on its own, that smallness sets a very high bar for confidently projecting the consequences of human influences.

A second challenge is that the oceans have a much larger impact, but we don’t know exactly what that impact will be.

Another challenge arises from feedbacks that can dramatically amplify or mute the climate’s response to these human and natural influences. Dr Koonin says that climate models give widely varying descriptions of the climate’s inner workings, disagreeing so markedly that no more than one of them can be right. These aren’t “minor” issues to be “cleaned up” by further research. Rather, they are deficiencies that erode confidence in the computer projections. Work to resolve these shortcomings in climate models should be among the top priorities for climate research. These are fundamental challenges to our understanding of human impacts on the climate, and shouldn’t be dismissed with the mantra that “climate science is settled.” Any serious discussion of the changing climate must begin by acknowledging not only the scientific certainties but also the uncertainties in projecting the future. Recognising those limits, rather than ignoring them, will lead to a more sober and ultimately more productive discussion of climate change and climate policies.

- Using nothing but wire, sculptor Clive Madison creates tangled trees that grow from wooden bases into dense clusters of leaves and branches. Each piece is made by hand without glue or solder, using single strands of wire that start at the base and terminate at the top. See this website for more.

Doppelgängers

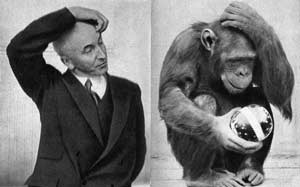

What Does It Mean to Be Smart?

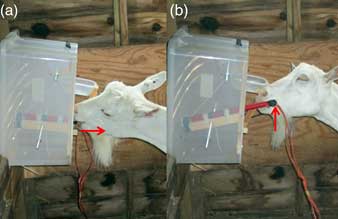

- Not only can domesticated goats learn a relatively complicated task very quickly, they show exceptional recall of that task almost a year later. What some might see as idiocy or vacancy in your average goat, others see as curiosity or rumination. And a new study leans toward the “they’re really clever” theory: Researchers tried to teach 12 goats at the Buttercups Sanctuary for Goats how to use a 2-step process to open a box and get a food reward. The goats had to first use their teeth to pull down a lever, and then nudge the lever back up in order to release the treat — and 9 of the 12 succeeded, in an average of only 8 tries.

- Sharks have personalities, with some being shy and solitary and others being more outgoing. UK researchers found that some sharks have gregarious personalities (forming strong social connections) while others are more solitary (preferring to remain inconspicuous). Researchers recorded the social interactions of 10 groups of juvenile catsharks in captivity in 3 different habitat types of varying structural complexity. Findings showed individual sharks possessed social personalities, with social network positions repeated through time and across different habitats; some were natural extroverts and some introverts.

- Pigs were asked to perform duties in which they had to respond to visual cues. They were given odour quizzes, correctly picking out, for example, spearmint, from an array of other smells that included mint and peppermint. Some studies have shown that scent is so important to a pig that if you cover up a part of its cheek, it has trouble recognising others because that’s where each emits a personal pheromone. These pigs were pampered and stimulated, living in large indoor runs and having lots of toys to play with to break up the monotony of the day. The pigs were extremely clean, even housebreaking themselves. Amazingly, at the end of a play session each put its own toys away in a big tub. They give eye contact and are very tactile, mouthing the boots and clothes of their human caretakers. Is it unhelpful to know how clever pigs are since they are bred to be eaten? I find it so.

In Flight

- Gannets have no external nostrils — these are located inside their mouths. Moreover, they have air sacs in their faces and chests under their skin which act like bubble wrap, cushioning water’s impact. Their eyes are positioned far enough forward on their faces to give them binocular vision, allowing them to judge distances more accurately.

- The roseate spoonbill is a gregarious wading bird of the ibis and spoonbill family. As with the American flamingo, the pink colour is diet-derived, coming from the carotenoid pigment canthaxanthin. Another carotenoid, astaxanthin, can also be found deposited in flight and body feathers. Roseate spoonbills have been known to live for 16 years.

- The great blue heron is a large wading bird common near the shores of open water and in wetlands over most of North and Central America as well as the Caribbean and the Galápagos Islands. It is a rare vagrant to Europe, with sightings in Spain, the Azores, England and the Netherlands. Its call is a harsh croak. Nonvocal sounds include a loud bill snap, which males use to attract a female or to defend a nest site and which females use in response to bachelor males or within breeding pairs.

- The bald eagle is a bird of prey found in North America. Its range includes most of Canada and Alaska, all of the contiguous United States, and northern Mexico. It’s found near large bodies of open water with an abundant food supply and old-growth trees for nesting. It builds the largest nest of any North American bird and the largest tree nests ever recorded for any animal species, up to 4 metres (13 feet) deep, 2.5 metres (8.2 feet) wide, and 1 metric ton (1.1 short tons) in weight. Sexual maturity is attained at the age of 4-5 years.

- The hen harrier or northern harrier (in the Americas) is a bird of prey that breeds throughout the northern parts of the northern hemisphere in Canada and the northernmost USA, and in northern Eurasia. It migrates to more southerly areas in winter. Little information is available on longevity. The longest-lived bird known died at 16 years and 5 months. However, adults rarely live more than 8 years. Early mortality mainly results from predation.

- The bald eagle is an opportunistic feeder which subsists mainly on fish, which it swoops down and snatches from the water with its talons. It’s a powerful flier, and soars on thermal convection currents, reaching speeds of 56–70 kilometres per hour (35–43 miles per hour) when gliding and flapping, and about 48 kilometres per hour (30 miles per hour) while carrying fish. Its dive speed is between 120–160 kilometres per hour (75–99 miles per hour), though it seldom dives vertically. The average lifespan of bald eagles in the wild is around 20 years, with the oldest confirmed one having been 28 years of age.

- Seagull food fight at St Augustine Beach, Florida. The upright bird may be a laughing gull and perhaps the upside-down one is a black noddy? (I’m no ornithologist — both guesses may be wrong.)

- The snow goose, also known as the blue goose, is a North American species. This goose breeds north of the timberline in Greenland, Canada, Alaska, and the northeastern tip of Siberia, and winters in warm parts of North America from southwestern British Columbia through parts of the US to Mexico. It’s a rare vagrant to Europe, but snow geese are regular visitors to the British Isles.

- Outside of the nesting season, snow geese usually feed in flocks. In winter they feed on left-over grain in fields. They migrate in large flocks, often visiting traditional stopover habitats in spectacular numbers. Snow geese frequently travel and feed alongside greater white-fronted geese; in contrast, the two tend to avoid travelling and feeding alongside Canada geese, which are often heavier birds.

Not Ordinary

- Imagine a world so crowded that the only way you can hope to get some private space is by encasing yourself in a fabric cocoon. That’s the premise behind Nutshell, a collapsible privacy shield for people suffering from social claustrophobia who just need a few minutes of peace.

- Dialysis machines are needed by patients with renal (kidney) failure (almost 40,000 people in the US alone) to clean their blood of bodily wastes. The US Food & Drug Administration’s (FDA) fast-track programme, Innovation Pathway, streamlines the approval process for breakthrough technologies. In 2012, the “Wearable Artificial Kidney” (WAK) was awarded fast-track status. Human trials are scheduled for 2017. The current prototype weighs just 10 pounds and can be carried about the waist of the patient. As time goes on and these devices become smaller and smaller, implanting them is expected to become the norm.

- Isaac Asimov, asked to write the novel from the Fantastic Voyage script, declared that the script was full of plot holes, and received permission to write the book the way he wanted. The novel came out first because he wrote quickly and because of delays in filming.

- Have your cake and eat it, too.

- As if internationally renowned fashion model Lydia Hearst (she of the Hearst family publishing fame and fortune) needed anything else to add to her diverse résumé — she can already claim model, actress, writer and blogger — she has joined supermodel Naomi Campbell as one of the supermodel coaches on season two of modelling competition show The Face. (Oh — did I forget to say she apparently can unzip her torso?)

- Artist Ben Young works with laminated clear float glass atop cast concrete bases to create cross-section views of ocean waves that look somewhat like patterns in topographical charts. The self-taught artist is currently based in Sydney but was raised in Waihi Beach, New Zealand, where the local landscape and surroundings greatly inspired his art. His stuff is for sale at Kirra Galleries.

- Built in 1931, this Art Deco railroad underpass in Birmingham, Alabama is a gateway between downtown and a new urban space called Railroad Park. In recent years the dark tunnel had deteriorated into an unwelcoming and potentially dangerous area, so the city hired sculptor and public artist Bill FitzGibbons to create a lighting solution that would encourage more pedestrian traffic. Titled LightRails, the installation is composed of a network of computer-controlled LEDs that form various lighting patterns in the underpass.

- This illuminated bike path is in Nuenen, Netherlands. The swirling patterns used on the kilometre-long Van Gogh-Roosegaarde Bicycle Path were inspired by the painting Starry Night (Van Gogh lived in Nuenen from 1883 to 1885), and it’s lit at night by both special paint that charges in daylight and embedded LEDs that are powered by a nearby solar array. It makes riding at night a pleasure.

Animal, Vegetable, and Mineral

- This deep ocean worm lives on the edge of a hot “black smoker” vent on the floor of the Atlantic Ocean. The record for heat tolerance is currently held by these organisms, called hyperthermophile methanogens, which thrive around the edges of the hydrothermal vents in the deep sea. Some of these organisms can grow at temperatures of up to 122°C (252°F). Most researchers believe that around 150°C (302°F) is the theoretical cut-off point for life. At that temperature proteins fall apart and chemical reactions cannot occur — a quirk of the biochemistry that life on earth (so far as we know) abides by. This means that microorganisms can thrive around hydrothermal vents, but not directly within them, where temperatures can reach up to 464°C (867°F).

- The sun sets over a rimu forest near Haast, New Zealand. Go off the beaten track, for that way lies enchantment.

- This installation at London’s Heathrow spritzes out different scents for different places around the world. Really? A country has a single smell? Who knew? According to Heathrow, about 47% of British travellers say their trip starts not at their destination, but at the airport. The globe’s scents are meant to “take passengers on a sensory journey before even setting foot on their flights.” The countries represented include South Africa, Japan, Thailand, China and Brazil. Previously, the airport sprayed the scent of pine needles to relax passengers.

Leaves Fall. So Does Rain.

- Aerial view of Henderson Field 24 September 2012 — just a hint of autumn.

- Aerial view of Henderson Field 8 November 2013 — full-blown autumn.

- Aerial view of nearby neighbourhood also 8 November 2013 — autumn rampant.

- Painter’s Hill Beach storm. These clouds are impressive.

- Summer Haven Beach storm. Same storm, further north.

- Mammatus clouds at sunset.

Man-Made

- This is underneath the old Hoover-Mason Trestle. It may become a park in the future, but for now, it lies abandoned.

- In 1996, Chatham became the home of Commercial Alcohols (now Greenfield Specialty Alcohols), which is the largest ethanol plant in Canada, and one of the largest in the world. It produces ethanol for industrial, medical, and recreational uses. In January 2005, the plant was named as one of Canada’s 50 best-managed companies. There are plans to double the size of the current Chatham facility.

- Located about 70 miles north of Seattle on the picturesque Puget Sound, the Tesoro March Point Anacortes Refinery has a total crude-oil capacity of 120,000 barrels per day. The refinery primarily supplies gasoline, jet fuel and diesel fuel to markets in Washington and Oregon, and manufactures heavy fuel oils, liquefied petroleum gas and asphalt. It receives crude feedstock via pipeline from Canada, by rail from North Dakota and the central US and by tanker from Alaska and foreign sources. In April 2010, an explosion and fire killed 7 employees when a nearly 40-year-old heat exchanger catastrophically failed during a maintenance operation.

- Few aircraft are as well known or were so widely used for so long as the C-47 Skytrain, which American soldiers nicknamed the “Gooney Bird.” The aircraft was adapted from the DC-3 commercial airliner which first appeared in 1936. The first C-47s were ordered in 1940 and by the end of WWII, over 10,000 had been procured for the USAAF and US Navy. They carried personnel and cargo, and in a combat role, towed troop-carrying gliders and dropped paratroops into enemy territory. The most widely used military transport in WWII, the C-47 also saw service with the US Navy as the R4D and with the RAF as the Dakota. This plane is on display somewhere in Delaware. It’s possibly a smaller-than-life-size model.

- First experimental house completed near Taos, New Mexico using empty steel beer and soft drink cans. The house was built using curved walls because they have more strength, resulting in pie-shaped interior rooms. There is a lawn on the roof below the overhang at the top of the structure. Recycled paper pulp is used to cover the ceiling of the interior. Later homes were built without curved walls after the designer found the cans would support much more weight than they would have to bear. University tests later substantiated this finding.

- Newer beer can house near Taos uses aluminium cans. Are the walls just as strong? Stronger? That information wasn’t provided.

Positively Reptilian

- The Pantanal is a natural region encompassing the world’s largest tropical wetland area. It’s located mostly within the Brazilian state of Mato Grosso do Sul, but extends into Mato Grosso and portions of Bolivia and Paraguay. It sprawls over an area estimated at between 140,000 and 195,000 square kilometres (54,000-75,000 square miles). The Yacare caiman is a species of caiman found in central South America, including northeastern Argentina, Uruguay, southeastern Peru, eastern Bolivia, central/southwest Brazil, and the rivers of Paraguay. About 10 million individual Yacare caimans exist within the Brazilian Pantanal, representing what is quite possibly the largest single crocodilian population on Earth. Are caimans alligators or crocodiles? According to Wikipedia, they are “alligatorid crocodylians”.

- A brown vine snake hunting at night at Mt Irvine. The snake is found from southern Arizona in the US (where it is called the pike-headed tree snake), through Mexico, to northern South America and Trinidad and Tobago. This is an extremely slender snake that reaches up to 1.9 metres (6.2 feet) in length. Its colour may vary from grey to brown with a yellow underside. When threatened, it sometimes releases foul-smelling secretions from its vent. It feeds mainly on lizards, but also eats frogs and birds. It is mildly venomous.

- The Komodo dragon, also known as the Komodo monitor, is a large species of lizard found in the Indonesian islands of Komodo, Rinca, Flores, Gili Motang, and Padar. A member of the monitor lizard family, it is the largest living species of lizard, growing to a maximum length of 3 metres (10 feet) in rare cases and weighing up to approximately 70 kilograms (150 pounds), though captive specimens often weigh more. Komodo dragons hunt and ambush prey including invertebrates, birds, and mammals. It has been claimed that they have a venomous bite; there are two glands in the lower jaw which secrete several toxic proteins. The biological significance of these proteins is disputed, but the glands have been shown to secrete an anticoagulant. Komodo dragon group behaviour in hunting is exceptional in the reptile world. Their diet mainly consists of deer, though they also eat considerable amounts of carrion and occasionally attack humans. Even seemingly docile dragons may become unpredictably aggressive, especially when the animal’s territory is invaded by someone unfamiliar. In June 2001, a Komodo dragon seriously injured Phil Bronstein, the then-husband of actress Sharon Stone, when he entered its enclosure at the Los Angeles Zoo after being invited in by its keeper. Bronstein was bitten on his bare foot, as the keeper had told him to take off his white shoes and socks, which the keeper stated could potentially excite the Komodo dragon as they were the same colour as the white rats the zoo fed it. Although Bronstein escaped, he needed to have several tendons in his foot reattached surgically.

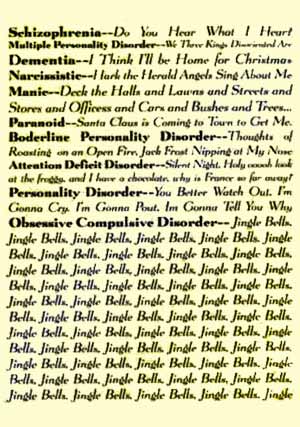

Moving on to Another Holiday

- Hope your Christmas is sane.

- Secular greetings.

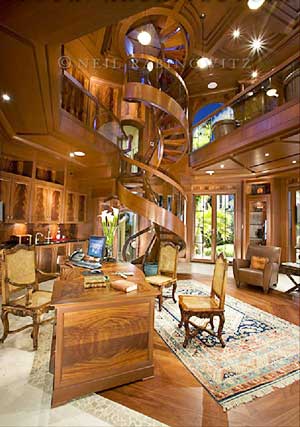

- This house on Emerald Cay, Turks & Caicos, Chalk Sound Drive, has 30,000 square feet, 8 bedrooms, 10 baths, a 6,000-bottle wine cellar, home theatre, 3-storey library, a fitness room, 2 swimming pools with a waterfall, courts for playing volleyball and tennis, 2 boat slips, a BBQ pavilion, guest boat house, caretaker’s cottage, and 2 private beaches. I’d ask some reader to please buy it for me for Christmas except (sadly) it’s already been sold — for only US$45 million.

On an Up Beat: Getting Ready to Depart

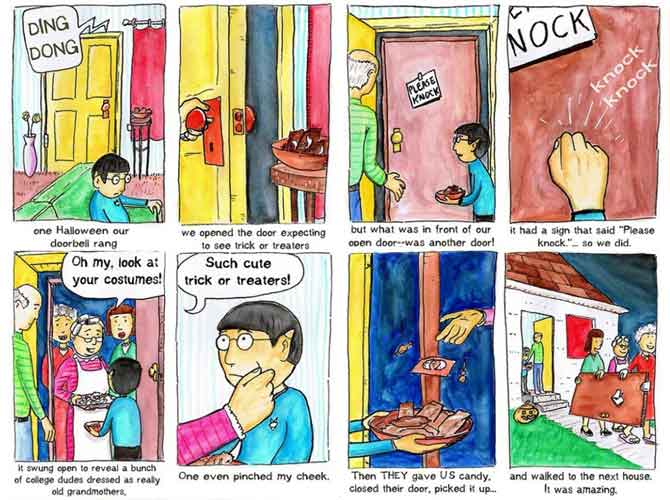

Artist Nick Kim has an unusual sense of humour.

One and Done

This is a single-seat, open cockpit, open-wheel racing car with substantial front and rear wings, and an engine positioned behind the driver, intended to be used in competition at Formula One racing events. F1 cars cost an average of US$14-15 million.

- Engine – Their engines idle around 5,000 (or more) revolutions per minute (rpm). At nearly 20,000 rpm, the titanium connecting rods of the pistons in the engine actually stretch slightly. The car can go from zero to 100 miles per hour (mph) and back to zero in about 5 seconds. There’s such an aerodynamic vacuum created behind an F1 that a car following closely — drafting — will eventually overheat its engine due to inadequate airflow. (This is called breathing “dirty air.”) An F1 engine has such small tolerances between components that they must travel connected to a compressed air supply to ensure they remain correctly stressed when the engine is off. The car can’t be started when cold without causing catastrophic damage. To ensure a perfect fit at race speeds and temperatures, the engine components are heated when the engine is assembled; pre-heated liquid must be circulated around the engine to return it to this temperature before engineers start it. Several heating cycles are performed in the garage before the car runs to ensure it’s up to temperature before leaving the pit.

- Tyres – Counter-intuitively, once the tyres have warmed up, the driver needs to drive faster (up to a point) when cornering so that down-force can come into play and allow safer negotiation of turns, which can be made up to 90° (or more) without slowing. F1 cars can corner at 3 to 6 times gravity (G) and brake at up to 5-7 Gs. To put that into perspective, the maximum acceleration of the space shuttle was just 3.5 Gs, while fighter jets top out around 8 or 9 Gs. Tyres rotate 50 times a second and are designed to last 90 to 120 kilometres (normal road car tyres last 60,000 to 100,000 kilometres).

- Chassis – There’s a wooden plank under each car that prevents the cars from riding too low (and benefiting aerodynamically). After each race, the plank is inspected, and if it has worn by more than 1 millimetre, the car is disqualified. Only 4 times in the entire history of Formula 1 have all cars starting the race completed the entire race without anyone dropping out.

- Speed – Although it has less horsepower and a lower top speed, an F1 car is so much lighter (even with its 80,000 components) that it’s much faster than a Bugatti Veyron. Above a certain speed (around 100 mph), F1 cars generate more down-force than their weight, so at that point, they can drive upside down (on the ceiling of a tunnel).

- Shifting – Drivers typically change gear up to 2,800 times per Grand Prix, but at circuits like Monaco, this number can increase to 4,000 times.

- Braking – If an F1 has been using brakes heavily and suddenly spins and comes to a stop, the lack of airflow over the brakes can cause them to spontaneously burst into flame (as brake discs can exceed temperatures of 1,000°C). At 300 kilometres per hour (~185 mph) lifting off the gas (increasing aerodynamic drag without applying brakes) will decelerate the car faster than slamming on the brakes would for an “average” road car. In fact, the F1 creates deceleration forces comparable to a regular car driving through a brick wall.

- Driving – The airflow over the drivers’ helmets generates enough lift to stretch their necks. F1 drivers endure physical training that would make an Olympic triathlete tremble (running, swimming, skiing, weights) just to handle the extreme forces generated. One documentary on the Red Bull team claimed they train by keeping their pulse around 200 beats per minute for 2 hours. This also helps them handle the unbearably high temperatures (50°C) inside the cockpit. (Drivers can lose 2-4 kilograms of weight through prolonged exposure to high G-forces and perspiration, particularly in more humid events.) The car’s cockpit is so tight it requires the driver to remove the steering wheel in order to get in or out.
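For anyone who wants to sanity-check a couple of the figures above, here is a rough Python sketch. It converts the “50 rotations a second” tyre figure into a road speed and turns a G-force into metres per second squared. The tyre diameter of 0.66 metres is my own assumption (it isn’t given above), and the function names are made up for illustration, so treat the result as a ballpark only.

```python
import math

# Assumption (not from the text above): an F1 tyre is roughly 0.66 m in diameter.
ASSUMED_TYRE_DIAMETER_M = 0.66
STANDARD_GRAVITY_MS2 = 9.80665  # one G, in metres per second squared


def tyre_speed_kmh(revs_per_second, diameter_m=ASSUMED_TYRE_DIAMETER_M):
    """Road speed implied by a wheel turning at the given rate without slipping."""
    circumference_m = math.pi * diameter_m
    return revs_per_second * circumference_m * 3.6  # m/s -> km/h


def g_to_ms2(g_force):
    """Convert a multiple of standard gravity to metres per second squared."""
    return g_force * STANDARD_GRAVITY_MS2


if __name__ == "__main__":
    print(f"50 rev/s -> {tyre_speed_kmh(50):.0f} km/h")
    print(f"5 G braking -> {g_to_ms2(5):.1f} m/s^2")
```

Under that assumed diameter, 50 rotations a second works out to roughly 373 km/h, so the figure probably describes the tyres near top speed rather than on a typical lap.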
